(Click to watch a clip on the formation experiment for ONR review, November 2002)

UC Berkeley's team has been actively working on imagery and the use of computer vision, favoring it for its decreased probability of detection and enhanced situational awareness. Though radar, LIDAR, ultrasonic, and other time-of-flight sensors can provide very accurate estimates of distance, they have several disadvantages. By their nature, time-of-flight sensors rely on self-emitted RF, laser, or ultrasonic signals, which adversaries can potentially use to detect the vehicle or, worse, to localize it by triangulation. Furthermore, active sensing modalities have relatively high rates of power consumption. Visible-light or IR cameras, on the other hand, are passive and rely solely on reflected ambient light or heat, thereby lowering the probability of detection as well as reducing power consumption. Several factors make vision-in-the-loop difficult: achieving the accuracy of active sensors without time-of-flight measurements; the high bandwidth and computation required for real-time operation; and inherent photogrammetric ambiguities that make some computations ill-conditioned. Imagery, however, has a number of benefits besides lower power and passivity. Because the signal is more descriptive, giving color and appearance information, imagery can be used for target recognition and for navigation from reference points when operating in unknown environments, as well as for enhanced situational awareness.

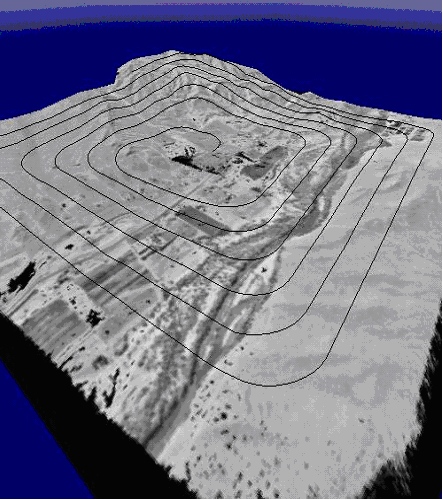

Many landing scenarios demand the ability to land in an arbitrary environment where a landing site has not been previously selected. A natural extension of the earlier landing project was to remove the requirement that a target be present in order to land autonomously. Whereas the site for a target would presumably be chosen to be safe for landing a helicopter (for example, clear of debris and foliage), without a target these characteristics must be gauged autonomously from the air to determine suitability for landing, which is a very challenging problem. It requires the ability to accurately estimate elevation and terrain slope, to reliably classify vegetation, and to detect small debris.
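As a rough illustration of how slope and small debris might be gauged once elevation estimates exist, the sketch below fits a least-squares plane to a small grid of heights and reports the plane's slope and the largest deviation from it. This is a minimal sketch under stated assumptions, not the BEAR team's method: the function names `assess_patch` and `is_landable`, the grid layout, and the thresholds (10 degrees, 0.1 m) are all hypothetical, and vegetation classification is not addressed.

```python
import math

def assess_patch(elevation, cell_size):
    """Fit a least-squares plane to a grid of heights (list of rows, in metres).

    Returns (slope in degrees, largest deviation from the plane in metres).
    Assumes a full rectangular grid with at least 2 rows and 2 columns.
    """
    rows, cols = len(elevation), len(elevation[0])
    # Coordinates are measured from the patch centre, so sum(x), sum(y) and
    # sum(x*y) are all zero and each plane coefficient can be solved on its own.
    xs = [(j - (cols - 1) / 2) * cell_size for j in range(cols)]
    ys = [(i - (rows - 1) / 2) * cell_size for i in range(rows)]
    cells = [(i, j) for i in range(rows) for j in range(cols)]
    a = sum(xs[j] * elevation[i][j] for i, j in cells) / (rows * sum(x * x for x in xs))
    b = sum(ys[i] * elevation[i][j] for i, j in cells) / (cols * sum(y * y for y in ys))
    c = sum(elevation[i][j] for i, j in cells) / len(cells)
    slope_deg = math.degrees(math.atan(math.hypot(a, b)))
    max_dev = max(abs(elevation[i][j] - (a * xs[j] + b * ys[i] + c)) for i, j in cells)
    return slope_deg, max_dev

def is_landable(elevation, cell_size, max_slope_deg=10.0, max_dev_m=0.1):
    """Hypothetical acceptance test; both thresholds are placeholders."""
    slope_deg, max_dev = assess_patch(elevation, cell_size)
    return slope_deg <= max_slope_deg and max_dev <= max_dev_m

# Illustrative patches on a 10 x 10 grid with 0.5 m cells (made-up data).
flat = [[0.0] * 10 for _ in range(10)]
tilted = [[0.5 * j for j in range(10)] for _ in range(10)]  # rises 1 m per metre
bump = [row[:] for row in flat]
bump[5][5] = 0.5  # a single 0.5 m obstacle
```

Using the largest deviation rather than an average is a deliberate choice here: a single small obstacle barely changes the mean residual of a patch but stands out clearly in the maximum.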

As part of the SEC extension program with Boeing, the BEAR team has designed a system to land an autonomous helicopter in an unknown environment [Geyer, CDC 2004], which will be flown on the Maverick platform at the end of May 2005. The system's design was constrained by several factors: real-time operation; accurate terrain estimates, which in turn necessitate accurate position and attitude estimates; passivity; and arbitrary terrain. For example, a naive choice might be to use a stereo vision system; the error in depth estimates from two views, however, increases with the square of depth, thereby prohibiting the use of stereo vision. The resulting system incorporates four major components: fusion of GPS, INS, and image measurements for increased accuracy of position, which can operate in environments of unknown terrain; a system that creates digital elevation maps in real time by integrating image measurements from multiple views (not just two) using a recursive filter; and the implementation of a site selection strategy based on appearance and estimated elevation maps. The on-board computer continually evaluates and analyzes the observed terrain to determine sites tolerable for landing, depending on factors such as the helicopter's landing constraints, the desired size of a clearing without trees or tall grasses, and the maximum landing slope.

We predict that vision in autonomous systems will revolutionize control systems, much as radar helped revolutionize combat in World War II. We firmly believe that autonomous sensing is necessary to achieve this goal, and that computer vision will have to form a major component of many control systems. It must be recognized that sensing by vision is not appropriate for all circumstances, for example very low light conditions where night vision is ineffective, or situations where vision cannot demonstrate sufficient accuracy. Furthermore, many challenges need to be overcome, including guaranteed robustness and computational speed. Nevertheless, one cannot help but observe that vision is prevalent everywhere in nature.
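The earlier remark that two-view depth error grows with the square of depth follows from the standard rectified-stereo relation Z = f·B/d: a small disparity error σ_d maps to a depth error of roughly Z²/(f·B)·σ_d. The sketch below evaluates that first-order expression and then shows how recursively fusing several independent measurements shrinks the variance of a static height estimate. The fusion step is a generic scalar Kalman-style update chosen for illustration; the source does not specify which recursive filter the system uses, and all names and numbers here are assumptions.

```python
def stereo_depth_sigma(depth_m, focal_px, baseline_m, disparity_sigma_px):
    """First-order depth uncertainty for a rectified stereo pair.

    From Z = f*B/d, |dZ/dd| = Z**2 / (f*B), so the error scales with Z squared.
    """
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_sigma_px

def fuse(estimate, variance, measurement, meas_variance):
    """One recursive update of a static height estimate (scalar Kalman form)."""
    gain = variance / (variance + meas_variance)
    new_estimate = estimate + gain * (measurement - estimate)
    new_variance = (1.0 - gain) * variance
    return new_estimate, new_variance

# Illustrative camera parameters only (not taken from the BEAR system).
sigma_near = stereo_depth_sigma(10.0, focal_px=800.0, baseline_m=0.5, disparity_sigma_px=0.5)
sigma_far = stereo_depth_sigma(20.0, focal_px=800.0, baseline_m=0.5, disparity_sigma_px=0.5)
# Doubling the depth quadruples the predicted error.

# Start from a first height measurement, then fold in three more views,
# each assumed independent with unit variance.
height, var = 10.0, 1.0
for z in [12.0, 11.0, 11.0]:
    height, var = fuse(height, var, z, 1.0)
```

With equal measurement variances, this update reduces to a running average whose variance falls as 1/N, which is one way to see why integrating many views can help where a single stereo pair cannot.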

The SWARMS project's goal is to develop a framework and methodology for the analysis of swarming behavior in biology and the synthesis of bio-inspired swarming behavior for engineered systems. We will be actively engaged in addressing questions such as: Can large numbers of autonomously functioning vehicles be reliably deployed in the form of a “swarm” to carry out a prescribed mission and to respond as a group to high-level management commands? Can such a group successfully function in a potentially hostile environment, without a designated leader, with limited communications between its members, and/or with different and potentially dynamically changing “roles” for its members?

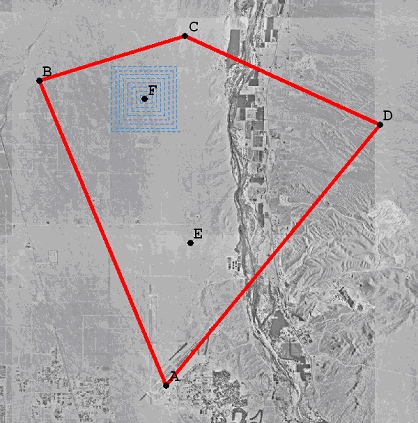

The BEAR group has started developing a large number of easily deployable and retrievable fixed-wing platforms based on commercially available flying-wing aircraft. We will soon be able to demonstrate the swarm-like coordination of SmartBATs, as shown in the following image, by providing each vehicle with a fully decentralized online optimization capability that actively computes a trajectory keeping the host vehicle in a safe formation on the way to the destination.